🔍 Diagnose the Reason for Low CTR

1️⃣ Check Creative Fatigue (the most common cause)

What to look for:

- Frequency > 2.5–3

- CTR dropping week-over-week

- CPM rising

- Quality ranking dropping (Meta replaced the old relevance score with quality, engagement rate, and conversion rate rankings)
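The numeric signals in this list can be checked mechanically. Below is a minimal Python sketch. It assumes you export weekly frequency, CTR and CPM per ad from Ads Manager. The `WeeklyStats` class and `fatigue_signals` function are hypothetical helpers, and the 2.5 threshold is the lower end of the range above. Quality ranking is left out because Meta reports it as a category, not a number.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WeeklyStats:
    frequency: float  # average impressions per person reached
    ctr: float        # click-through rate as a fraction (0.012 = 1.2%)
    cpm: float        # cost per 1,000 impressions


def fatigue_signals(prev: WeeklyStats, curr: WeeklyStats,
                    freq_threshold: float = 2.5) -> List[str]:
    """Return the creative-fatigue signals present this week (hypothetical rules)."""
    signals = []
    if curr.frequency > freq_threshold:
        signals.append("frequency above threshold")
    if curr.ctr < prev.ctr:
        signals.append("CTR down week-over-week")
    if curr.cpm > prev.cpm:
        signals.append("CPM rising")
    return signals


last_week = WeeklyStats(frequency=2.1, ctr=0.014, cpm=180.0)
this_week = WeeklyStats(frequency=3.2, ctr=0.009, cpm=215.0)
print(fatigue_signals(last_week, this_week))
# → ['frequency above threshold', 'CTR down week-over-week', 'CPM rising']
```

A single signal on its own can be noise. All three firing together match the fatigue pattern described here.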

Why it happens:

Audiences have seen the ad too many times → scroll past → CTR sinks.

Fix:

- Refresh creative: new images, new videos, new headlines

- Test new formats: Reels, carousels, student testimonial videos

2️⃣ Analyze Audience Relevance

Check:

- Is the same audience being targeted for too long?

- Has the audience become too broad or too narrow?

- Has lookalike quality degraded?

- Are interest groups still relevant?

Fixes:

- Refresh audiences every 30–45 days

- Add new lookalikes:

  - High-intent website visitors

  - Brochure downloaders

  - Completed applications (best seed)

- Expand age/location slightly

- Remove saturated audiences

3️⃣ Check Ad Placement Issues

Sometimes CTR drops because:

- Ads are running heavily on low-performing placements (Audience Network, In-stream video, Right column)

Diagnosis:

- In Ads Manager, use Breakdown → By Delivery → Placement.

Fixes:

- Turn off poor placements

- Prioritize: Feeds, Reels, Stories
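To act on the placement breakdown, one option is to compare each placement's CTR with the overall CTR. Below is a minimal Python sketch. The rows are made-up numbers standing in for a placement breakdown export. The 0.5 cut-off is an assumption, not a Meta rule.

```python
from typing import List, Tuple

# Hypothetical placement breakdown: (placement, impressions, link clicks)
Row = Tuple[str, int, int]

rows: List[Row] = [
    ("Facebook Feed", 120_000, 1_560),
    ("Instagram Reels", 80_000, 1_120),
    ("Audience Network", 60_000, 240),
    ("Right column", 20_000, 40),
]


def weak_placements(rows: List[Row], ratio: float = 0.5) -> List[str]:
    """Flag placements whose CTR is below `ratio` times the overall CTR."""
    total_impressions = sum(impr for _, impr, _ in rows)
    total_clicks = sum(clicks for _, _, clicks in rows)
    overall_ctr = total_clicks / total_impressions
    return [name for name, impr, clicks in rows
            if impr > 0 and clicks / impr < ratio * overall_ctr]


print(weak_placements(rows))  # → ['Audience Network', 'Right column']
```

CTR alone can mislead. Check cost per lead for the flagged placements before switching them off.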

4️⃣ Evaluate the Hook + Message Relevance

Your message may no longer match student motivation.

Questions to ask:

- Is the ad addressing what students care about?

- Is the headline strong?

- Is the creative talking about features instead of benefits?

- Is the CTA too generic?

Fixes (Education example):

- Highlight that admissions are open

- Showcase student placement records

- Show campus facilities

- Use student faces

- Add urgency: “Last few seats”, “Application closes soon”

5️⃣ Review Ad Quality Ranking

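Three ad relevance diagnostics:

- Quality ranking

- Engagement rate ranking

- Conversion rate ranking

If these fall below average → the ad is underperforming ads that compete for the same audience → reach and CTR drop. (Meta reports these rankings as diagnostics; they are not direct inputs to the ad auction.)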

Fix:

- Completely new creative + new angle

- Better copywriting

- Use video testimonials or campus reels (high engagement formats)

6️⃣ Check Competitor Activity / Seasonal Shifts

During admission seasons, competition rises → CPM goes up → CTR may drop.

Diagnosis:

- Sudden spike in CPM

- Same creative performing worse at peak season

Fixes:

- Increase bids or budgets slightly

- Improve creative competitiveness

- Run more high-attention formats (video, reels)

7️⃣ Inspect Landing Page Preview Issues

For lead forms, check Instant Form Preview.

Potential problems:

- Form not loading

- Redirect warning

- Slow loading (for website landing pages)

- Message mismatch between ad & form headline

Fixes:

- Shorten form

- Align headline with ad

- Improve creative → form relevance

🔍 Fixing the CTR Drop (Action Plan)

- Create 3–4 new creatives:

  - 1 video (campus tour / student testimonial)

  - 1 static (USP highlights)

  - 1 carousel (programs offered)

  - 1 reel-style short video

- Rewrite the copy:

  - New hooks

  - Stronger CTA

  - Stronger program-specific messaging, for example:

    - “DSU UG Admissions 2026 Now Open – Apply Today!”

    - “100% Placement Support | Top Ranked University in Bengaluru”

- Refresh audiences:

  - Duplicate the ad set

  - Add fresh interest clusters

  - Add new lookalikes

  - Exclude old engaged users

- Fix placements:

  - Turn off: Audience Network, Right column, In-stream

  - Keep: Facebook Feed, Instagram Feed, Stories, Reels

- Use education-specific creative angles:

  - Student testimonials

  - Campus visuals

  - Before-after style career messaging

  - Ranking and placement credibility signals

- Monitor:

  - CTR trend

  - CPM

  - CPC

  - Ad quality rankings (quality, engagement rate, conversion rate)

  - Lead quality
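The cost and rate metrics in this list follow standard formulas. Below is a minimal Python sketch. It assumes raw totals for one reporting period. The `ad_metrics` function and the sample numbers are illustrative only. Division by zero returns NaN instead of raising an error.

```python
import math
from typing import Dict


def _ratio(numerator: float, denominator: float) -> float:
    """Safe division: NaN when the denominator is zero."""
    return numerator / denominator if denominator else math.nan


def ad_metrics(spend: float, impressions: int, clicks: int, leads: int) -> Dict[str, float]:
    return {
        "ctr": _ratio(clicks, impressions),       # clicks per impression
        "cpm": _ratio(spend * 1000, impressions),  # cost per 1,000 impressions
        "cpc": _ratio(spend, clicks),             # cost per click
        "cpl": _ratio(spend, leads),              # cost per lead
    }


print(ad_metrics(spend=50_000, impressions=250_000, clicks=3_000, leads=150))
```

The sample numbers give the following, all in the account currency:

- CTR: 1.2%
- CPM: 200
- CPC: about 16.67
- CPL: about 333.33

Tracking these per week makes the CTR trend and CPM movements easy to compare.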

🔍 What’s your approach to A/B testing on Meta?

- Test one variable at a time

- Use separate ad sets for clean testing

- Run for at least 7–10 days

- Compare CTR, CPC, CPL, and lead quality

- Consider cultural nuance for international audiences

- Scale winning creative to new regions
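When comparing CTR between two variants, check that the gap is larger than chance. Meta's own A/B test tool reports its own results. The sketch below swaps in a textbook two-proportion z-test in Python as an independent sanity check. It treats every impression as an independent trial. That is only approximately true when the same people see an ad several times, so read the p-value as rough.

```python
import math
from typing import Tuple


def ctr_z_test(clicks_a: int, impr_a: int, clicks_b: int, impr_b: int) -> Tuple[float, float]:
    """Two-sided two-proportion z-test on CTR. Returns (z, p_value)."""
    ctr_a = clicks_a / impr_a
    ctr_b = clicks_b / impr_b
    pooled = (clicks_a + clicks_b) / (impr_a + impr_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / impr_a + 1 / impr_b))
    if std_err == 0:
        return 0.0, 1.0  # no clicks at all (or all clicks): nothing to compare
    z = (ctr_b - ctr_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Variant A: 1.0% CTR, variant B: 1.3% CTR, 40,000 impressions each (made-up numbers)
z, p = ctr_z_test(400, 40_000, 520, 40_000)
print(f"z = {z:.2f}, p = {p:.5f}")
```

With these made-up numbers, z is close to 4 and p is far below 0.05. The same 0.3-point gap on a few thousand impressions would not be convincing, which is one reason to let tests run the full 7–10 days.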

🔍 If CTR drops suddenly, what do you do?

- Check frequency (creative fatigue)

- Refresh creatives

- Check audience overlap

- Remove low-performing placements

- Improve the hook in the copy

- Review CPC and CPM (competition)
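Overlap between two audiences is the share of people who sit in both. Ads Manager has its own audience overlap feature for saved and custom audiences. The Python sketch below only illustrates the arithmetic, assuming you hold two sets of user identifiers (for example, hashed CRM IDs). The function name and the choice of the smaller audience as the denominator are assumptions.

```python
from typing import Set


def overlap_pct(audience_a: Set[str], audience_b: Set[str]) -> float:
    """Percentage of the smaller audience that also appears in the other one."""
    if not audience_a or not audience_b:
        return 0.0
    shared = len(audience_a & audience_b)
    return 100 * shared / min(len(audience_a), len(audience_b))


lookalike_1pct = {"u1", "u2", "u3", "u4"}
interest_cluster = {"u3", "u4", "u5"}
print(round(overlap_pct(lookalike_1pct, interest_cluster), 1))  # → 66.7
```

High overlap means two ad sets bid against each other for the same people, which tends to push CPC up.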

🔥 Walk me through your Meta Ads campaign structure.

- Clearly defined objective (Leads, Sales, Traffic, Engagement).

- Separate campaigns for prospecting and retargeting.

- CBO (campaign budget optimization, now called Advantage campaign budget) for scaling; ABO (ad set budgets) for testing.

- Warm vs. cold audiences separated.

- Different ad sets for:

  - Lookalikes (1%, 2–5%)

  - Interest clusters (3–4 tightly related interests)

  - Broad (no interest targeting; best for scale)

- Placements → Advantage+ placements for efficiency.

- 3–4 creatives per ad set: 1 video, 1 static, 1 carousel, 1 UGC.

- Creative tailored to funnel stage (TOFU → storytelling, BOFU → USP + CTA).

- Refresh creatives every 10–14 days, depending on fatigue.

🔥 What is your process for diagnosing a drop in performance?

- CPM ↑ → Audience or competition issue

- CTR ↓ → Creative or messaging issue

- CPC ↑ → Relevance or auction overlap

- CVR ↓ → Landing page, offer, or lead form friction

- CPL ↑ → Combination of the above

Then break results down by:

- Age

- Gender

- Placement

- Country

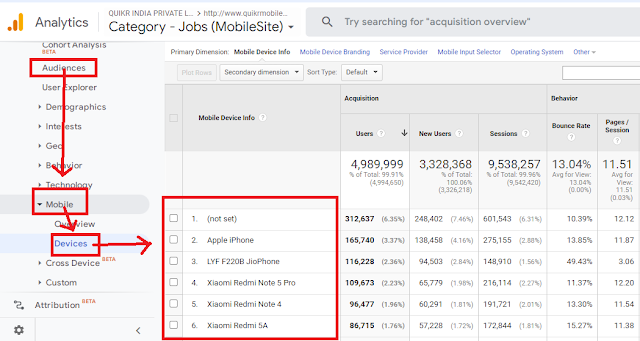

- Device

Fixes:

- CTR low → Refresh creatives, new hooks, new formats

- CPC high → Broaden audience, reduce overlap

- CPM high → Test new interest clusters / switch to broad

- CVR low → Improve landing page speed + clarity

- Leads low → Remove unnecessary form fields
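The symptom → cause → fix mapping in this section can be written as a small rule table. Below is a minimal Python sketch. It assumes you have the same metrics for two comparable periods. The 10% tolerance is an arbitrary choice. CPL is left out because a CPL rise is usually a combination of the other signals.

```python
from typing import Dict, List, Tuple

# metric -> (direction that signals trouble, likely cause, first fix to try)
RULES: Dict[str, Tuple[str, str, str]] = {
    "cpm": ("up", "audience or competition issue", "test new interest clusters / switch to broad"),
    "ctr": ("down", "creative or messaging issue", "refresh creatives, new hooks, new formats"),
    "cpc": ("up", "relevance or auction overlap", "broaden audience, reduce overlap"),
    "cvr": ("down", "landing page, offer, or lead form friction", "improve landing page speed + clarity"),
}


def diagnose(prev: Dict[str, float], curr: Dict[str, float],
             tolerance: float = 0.10) -> List[Tuple[str, str, str]]:
    """Return (metric, cause, fix) for metrics that moved the wrong way by more than `tolerance`."""
    findings = []
    for metric, (bad_direction, cause, fix) in RULES.items():
        if not prev.get(metric) or metric not in curr:
            continue  # skip missing metrics and zero baselines
        change = (curr[metric] - prev[metric]) / prev[metric]
        worse = change > tolerance if bad_direction == "up" else change < -tolerance
        if worse:
            findings.append((metric, cause, fix))
    return findings


last_period = {"cpm": 200.0, "ctr": 0.012, "cpc": 16.7, "cvr": 0.05}
this_period = {"cpm": 205.0, "ctr": 0.008, "cpc": 25.0, "cvr": 0.05}
for metric, cause, fix in diagnose(last_period, this_period):
    print(f"{metric}: {cause} -> {fix}")
```

Here CTR and CPC are flagged, while the 2.5% CPM rise stays inside the tolerance. The next step would be the demographic and placement breakdowns to locate where the drop happens.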

🔥 How do you approach scaling Meta Ads profitably?

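- Increase budget by 20–30% every 48 hours. This is a common heuristic: large budget jumps count as significant edits and can send the ad set back into the learning phase.

- Move winning ad sets into a CBO campaign for more efficient delivery.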

- Duplicate winners into higher budgets if scaling aggressively.

- Add new lookalike ranges (1%, 3%, 5%)

- Add broad ad sets (No interest targeting)

- Launch new creatives to reduce fatigue

- Expand into new countries / languages

- Add new placements

- Use Advantage+ Audience for algorithm-driven reach
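Compounding makes the 20–30% pace faster than it sounds. Below is a minimal Python sketch of the schedule. It assumes a fixed percentage step every 48 hours and no other edits to the ad set. `scaling_schedule` is a hypothetical helper.

```python
from typing import List, Tuple


def scaling_schedule(start_budget: float, step_pct: float, steps: int,
                     interval_hours: int = 48) -> List[Tuple[int, float]]:
    """(hours from start, daily budget) after each fixed-percentage increase."""
    schedule = []
    budget = start_budget
    for step in range(steps + 1):
        schedule.append((step * interval_hours, round(budget, 2)))
        budget *= 1 + step_pct / 100
    return schedule


for hours, budget in scaling_schedule(10_000, 20, 4):
    print(f"hour {hours:>3}: {budget:,.2f}")
```

At 20% per step, a 10,000/day budget reaches 20,736/day after four steps (hour 192, about 8 days). The budget roughly doubles in a little over a week, because 1.2⁴ ≈ 2.07.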

🔥 How do you test creatives in Meta Ads?

Hook tests:

- Test 3–5 hooks on the same video/body copy

- Choose the best based on 3-sec view %, CTR

Format and visual tests:

- Video vs. Static vs. Carousel

- Test thumb-stoppers & first 3 seconds

Angle tests:

- Emotional angle

- Pain-point angle

- Product-benefit

- Social proof

- Urgency/scholarship angle (for education)

Iterate:

- Take winners → refresh with new variations

- Take losers → study why, then improve lines, visuals, CTAs
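"Choose the best based on 3-sec view % and CTR" can be made concrete by ranking hooks on hook rate and keeping CTR next to it for review. Hook rate here means 3-second video plays ÷ impressions, a common practitioner definition of thumb-stop rate. Below is a minimal Python sketch with made-up numbers. `HookResult` and `rank_hooks` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HookResult:
    name: str
    impressions: int
    three_sec_views: int  # 3-second video plays
    link_clicks: int

    @property
    def hook_rate(self) -> float:
        return self.three_sec_views / self.impressions

    @property
    def ctr(self) -> float:
        return self.link_clicks / self.impressions


def rank_hooks(results: List[HookResult]) -> List[HookResult]:
    """Sort hooks by 3-second view rate, highest first; CTR is kept for review."""
    return sorted(results, key=lambda r: r.hook_rate, reverse=True)


results = [
    HookResult("Question hook", 20_000, 6_000, 220),
    HookResult("Stat hook", 20_000, 5_000, 260),
    HookResult("Testimonial hook", 20_000, 7_000, 200),
]
for r in rank_hooks(results):
    print(f"{r.name}: hook rate {r.hook_rate:.0%}, CTR {r.ctr:.2%}")
```

In this example the stat hook stops the fewest thumbs but earns the best CTR. That trade-off calls for a follow-up test rather than an automatic pick.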

🔥 What metrics do you prioritize while optimizing?

- CPM

- CTR

- Thumb-stop rate

- Add-to-cart / View content

- Frequency

- Hook retention

- CPC

- Landing page view rate

- Time on site

- Lead quality indicators

- Conversion rate

- Cost per lead / sale

- ROAS

- Attribution by 1-day click

🔥 What’s your strategy for retargeting on Meta Ads?

Warm audiences:

- Website visitors

- Video viewers (50%+)

- Engaged users

- Creative: Social proof + benefits + strong CTA

Hot audiences:

- Added to cart

- Initiated checkout

- Opened lead form but didn’t submit

- Creative: Testimonials, FAQs, objection handling

- Abandoned forms

- Repeated checkout users
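Warm and hot pools overlap: a cart abandoner is also a website visitor. Each person should therefore land only in the highest-intent tier. Below is a minimal Python sketch of that precedence rule. The event names are hypothetical labels, not Meta event identifiers. In Ads Manager, the same effect comes from excluding the hot custom audiences from the warm ad sets.

```python
from typing import Optional, Set

# Hypothetical event labels grouped by intent, following the tiers above
HOT_EVENTS = {"added_to_cart", "initiated_checkout", "opened_lead_form"}
WARM_EVENTS = {"website_visit", "video_view_50pct", "page_engagement"}

CREATIVE_BY_TIER = {
    "hot": "testimonials, FAQs, objection handling",
    "warm": "social proof + benefits + strong CTA",
}


def retargeting_tier(events: Set[str]) -> Optional[str]:
    """Assign a user to the highest-intent tier their events qualify for."""
    if events & HOT_EVENTS:
        return "hot"
    if events & WARM_EVENTS:
        return "warm"
    return None  # not in any retargeting pool


users = {
    "user_1": {"website_visit"},
    "user_2": {"website_visit", "opened_lead_form"},
    "user_3": set(),
}
for user, events in users.items():
    tier = retargeting_tier(events)
    print(user, tier, CREATIVE_BY_TIER.get(tier, "-"))
```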

🔥 How do you improve lead quality?

- Use higher-intent lead forms

- Ask qualifying questions

- Retarget video engagers instead of random cold traffic

- Exclude junk audiences (freebie seekers)

- Create ROI-focused lookalikes:

  - Completed lead form

  - High-quality leads

  - Enrolled students or high-value buyers

🔥 What is your approach to attribution in Meta Ads?

- 7-day click for longer journeys

- UTMs in GA4 for cross-platform comparison

- Offline conversions / CRM match to verify lead-to-enrollment or sale

- Compare break-even CAC with blended CAC (total spend ÷ total new customers across all channels)
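UTM parameters let GA4 attribute sessions to a specific campaign and creative. Below is a minimal Python sketch using only the standard library's `urllib.parse`. The source, medium and campaign values are placeholders for your own naming convention. `add_utms` is a hypothetical helper.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit


def add_utms(url: str, source: str, medium: str, campaign: str, content: str = "") -> str:
    """Append UTM parameters to a landing-page URL, keeping any existing query string."""
    params = {"utm_source": source, "utm_medium": medium, "utm_campaign": campaign}
    if content:
        params["utm_content"] = content  # e.g. identifies the creative variant
    parts = urlsplit(url)
    query = f"{parts.query}&{urlencode(params)}" if parts.query else urlencode(params)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))


print(add_utms("https://www.example.com/apply", "facebook", "paid_social",
               "ug_admissions_2026", "reel_testimonial"))
# → https://www.example.com/apply?utm_source=facebook&utm_medium=paid_social&utm_campaign=ug_admissions_2026&utm_content=reel_testimonial
```

A distinct `utm_content` per creative lets you compare creatives on downstream outcomes in GA4, not only on Meta-reported CTR.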

🔥 How do you reduce CPL or CPA?

Creative:

- New hooks, better thumb-stoppers

- UGC ads + testimonial creatives

- Shorter, sharper ad copy

Targeting:

- Move to broad targeting

- Break large interest groups into tight clusters

- Update lookalike seeds

Conversion path:

- Improve landing page speed

- Use the Conversions API

- Remove 3–4 unnecessary form fields

- Improve lead form flow

- Shorter windows

- Stronger BOFU messaging

🔥 How do you identify and fix a drop in CTR?

- Creative fatigue – check frequency and quality ranking.

- Audience saturation – ensure the targeting isn't stale.

- Placement performance – some placements may be dragging CTR down.

- Relevance issues – messaging might no longer match audience intent.

- Competitive pressure – CPM increases could indicate the season is crowded.

🔥 How do you measure lead quality?

- Lead → Application conversion rate

- Lead → Counseling attended rate

- Program interest relevance

- Region-wise conversion trends

- CRM scoring (if available)
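The first two rates in this list are simple ratios once leads are joined to CRM outcomes. Below is a minimal Python sketch. It assumes a CRM export with one record per lead and boolean outcome fields. The field names and regions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def funnel_rates_by_region(leads: List[dict]) -> Dict[str, Dict[str, float]]:
    """Lead → counseling and lead → application rates, per region."""
    counts = defaultdict(lambda: {"leads": 0, "counseling": 0, "applied": 0})
    for lead in leads:
        c = counts[lead["region"]]
        c["leads"] += 1
        c["counseling"] += int(lead["counseling_attended"])
        c["applied"] += int(lead["applied"])
    return {
        region: {
            "lead_to_counseling": c["counseling"] / c["leads"],
            "lead_to_application": c["applied"] / c["leads"],
        }
        for region, c in counts.items()
    }


crm_export = [
    {"region": "Region A", "counseling_attended": True, "applied": True},
    {"region": "Region A", "counseling_attended": True, "applied": False},
    {"region": "Region A", "counseling_attended": False, "applied": False},
    {"region": "Region A", "counseling_attended": True, "applied": True},
    {"region": "Region B", "counseling_attended": True, "applied": False},
    {"region": "Region B", "counseling_attended": False, "applied": False},
]
print(funnel_rates_by_region(crm_export))
```

Comparing these rates across regions or ad sets shows where low-cost leads are not turning into applicants. Raw lead counts alone would not reveal this.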